DeepSeek Claims R1 Update Matches ChatGPT o3 and Gemini 2.5 Pro, DeepSeek has unveiled its upgraded model — DeepSeek R1–0528, a significant enhancement over its previous version. With a strong emphasis on advanced math, coding, and logical reasoning, the new model directly challenges leading AI systems like OpenAI’s ChatGPT o3 and Google’s Gemini 2.5 Pro. This update isn’t just a marginal improvement; it brings substantial gains across critical benchmarks, particularly in competitive math problem solving and real-world coding tasks.

The model is now publicly available on Hugging Face, making it accessible for developers, researchers, and enterprises seeking open-weight alternatives with top-tier capabilities.

Overview of DeepSeek R1–0528

In May 2025, Chinese AI startup DeepSeek introduced an upgraded version of its R1 reasoning model, named DeepSeek R1–0528. This update, available on the Hugging Face platform, marks a significant stride in AI development, particularly in mathematics, coding, and logical reasoning. The R1–0528 model builds upon its predecessor by leveraging increased computational resources and advanced post-training optimizations, resulting in enhanced performance across various benchmarks.

According to DeepSeek, the upgraded model — DeepSeek-R1-0528 — has “significantly improved its depth of reasoning and inference capabilities.” The startup said the model’s overall performance is now “approaching that of leading models, such as O3 and Gemini 2.5 Pro.”

“Compared to the previous version, the upgraded model shows significant improvements in handling complex reasoning tasks,” DeepSeek adds in its post.

DeepSeek says that besides being good at problem solving and reasoning, the upgraded R1 or R1-0528 also hallucinates less. The model now also apparently offers a “better experience for vibe coding”.

Benchmark Results

DeepSeek R1–0528 has demonstrated impressive results in various benchmark evaluations, highlighting its enhanced capabilities:

- Mathematics: The model achieved a 91.4% pass rate on the AIME 2024 benchmark, a significant improvement from the original R1’s 79.8%. Similarly, on the AIME 2025 test, accuracy increased from 70.0% to 87.5%.

- Coding: In the LiveCodeBench evaluation, R1–0528 scored 73.3%, up from the original’s 63.5%. The model also improved its performance on the Codeforces-Div1 benchmark, with ratings increasing from 1530 to 1930.

- Logical Reasoning: On the GPQA-Diamond benchmark, R1–0528 achieved an 81.0% pass rate, compared to the original’s 71.5%. The model also showed improvements in the HMMT 2025 test, with scores rising from 41.7% to 79.4%.

These enhancements position DeepSeek R1–0528 as a strong contender in the AI landscape, narrowing the performance gap with leading models from OpenAI and Google.

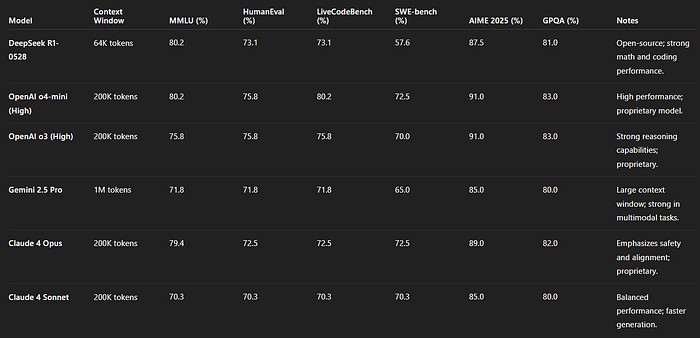

How does DeepSeek R1–0528 fare against other state-of-the-art LLMs?

Below is a summary of comparisons drawn from available official benchmarks and early real-world tests:

Comprehensive Benchmark Comparison of Leading AI Models: DeepSeek R1–0528, OpenAI o3 & o4-mini, Gemini 2.5 Pro, and Claude 4 Variants

Google Gemini 2.5 Pro (Preview)- Gemini 2.5 Pro is Google DeepMind’s latest flagship model, known for strong coding and reasoning. DeepSeek R1–0528 is now in the same league: on composite evaluations, R1–0528’s median score slightly exceeds that of Gemini 2.5 Pro. In direct benchmarks, the two are often very close- e.g. on a general QA test (GPQA) Gemini scored ~84% vs DeepSeek ~81%. On coding benchmarks like Codeforces and Aider-Polyglot, R1–0528 actually matches or edges out Gemini’s performance.

Anthropic Claude 4 (Opus & Sonnet 4) by Anthropic comes in versions like Sonnet (100k-token context with “extended thinking”) and Opus (an iteration with refined alignment). DeepSeek R1–0528’s performance is in the same ballpark as Claude 4 on most benchmarks.

In the Aider-Polyglot coding challenge, R1–0528 scored 71.6% accuracy, actually beating claude-sonnet-4–20250514 (32k thinking) (61.3%).

An independent review by @ArtificialAnlys showed 8 points increse in R1.2 performance in their “intelligence” index over the original R1. Just two points below O3 and o4-mini-high.

Across the board, independent testers have found R1–0528 to bridge much of the gap between open models and the closed leaders, all while maintaining the flexibility and low cost of an open model.

DeepSeek R1–0528 vs Original R1: What’s Changed?

The transition from the original R1 to R1–0528 encompasses several key improvements:

-

Enhanced Reasoning: R1–0528 exhibits deeper reasoning capabilities, allowing it to tackle more complex tasks with greater accuracy.

-

Reduced Hallucinations: The updated model has a lower tendency to generate incorrect or nonsensical information, enhancing its reliability.

-

System Prompt Integration: Unlike its predecessor, R1–0528 supports system prompts without requiring special tokens, streamlining its deployment for developers.

-

Improved Coding Assistance: With fortified programming capabilities, R1–0528 offers more robust support for coding tasks, making it a valuable tool for developers.

These advancements reflect DeepSeek’s dedication to refining its AI models to meet the evolving needs of users and industries.

Final Thoughts

DeepSeek’s R1–0528 update signifies a notable advancement in AI development, showcasing the company’s ability to enhance reasoning, coding, and logical capabilities effectively. By achieving performance levels comparable to leading models like ChatGPT o3 and Gemini 2.5 Pro, DeepSeek positions itself as a significant player in the AI arena.

As AI technology continues to evolve, DeepSeek’s commitment to open-source development and continuous improvement suggests a promising trajectory. The R1–0528 model not only demonstrates technical prowess but also contributes to the broader AI community by providing accessible and powerful tools for various applications.

For more posts visit buzz4ai.in

[…] which it claims is more efficient than closed-source competitors in China and outperforms DeepSeek’s latest R1-0528 model in several benchmarks. The M1 model supports a context length of one million tokens—eight times […]