Google I/O 2025 Highlights

Google I/O 2025 was dedicated to AI.

At its annual developer conference, Google announced updates that put more AI into Search, Gmail, and Chrome. Its AI models were updated to be better at making images, taking actions, and writing code.

Google also previewed some big swings for the future: plans to revamp video calls, build a more aware and conversational assistant, and partner with traditional glasses companies on smart glasses.

Image Credit : Future

Here’s a quick summary of what Google’s likely to talk about during the Google I/O keynote.

Google I/O 2025: How to watch

The Google I/O keynote gets started at 1 p.m. ET / 10 a.m. PT / 6 p.m. BST on Tuesday. You can watch from the Google I/O website or from a YouTube live stream that we’ve embedded here.

In addition to the regular keynote, a developer keynote follows at 4:30 p.m. ET / 1:30 p.m. PT / 9:30 p.m. BST that will also stream on the I/O website. This figures to be a deeper dive into the main topics from the first keynote, plus more developer-centric news.

After two hours, the Google I/O 2025 keynote has finally come to a close. There have been a lot of announcements regarding Google’s AI ambitions, including new models for Gemini, but the biggest reveal has to be the Android XR smart glasses. Stay tuned for our in-depth reporting on the biggest news.

Google Made an AI Coding Tool Specifically for UI Design

Google is launching a new generative AI tool that helps developers swiftly turn rough UI ideas into functional, app-ready designs. The Gemini 2.5 Pro-powered “Stitch” experiment is available on Google Labs and can turn text prompts and reference images into “complex UI designs and frontend code in minutes,” according to the announcement during Google’s I/O event, sparing developers from manually creating design elements and then programming around them.

These three examples provided by Google show what Stitch is capable of generating. | Image Credit : Google

Stitch generates a visual interface based on selected themes and natural language descriptions, which are currently supported in English. Developers can provide details they would like to see in the final design, such as color palettes or the user experience. Visual references can also be uploaded to guide what Stitch generates, including wireframes, rough sketches, and screenshots of other UI designs.

Android XR smart glasses reveal leaves more to be desired

Image Credit : Google

Google took the wraps off its Android XR-powered smart glasses, which we have to admit look like an ordinary pair of glasses. It even showed off some practical demos to the live audience, but it was a short reveal that leaves more to be desired.

Xreal’s Project Aura is officially the second Android XR device since the platform was launched last December. The first is Samsung’s Project Moohan, but that’s an XR headset more in the vein of the Apple Vision Pro. Project Aura, however, is firmly in the camp of Xreal’s other gadgets. The technically accurate term would be an “optical see-through XR” device. More colloquially, it’s a pair of immersive smart glasses.

Native audio output with Gemini Live

Image Credit : Google

A demo with Gemini Flash and Pro showed how Gemini Live can speak in different tones and languages, switching between them mid-conversation, for a more natural conversational tone.

Google is bringing an ‘Agent Mode’ to the Gemini app

Google is bringing an “Agent Mode” to the Gemini app and making some updates to its Project Mariner tool, CEO Sundar Pichai announced at Google I/O 2025.

Image Credit : Google

With Agent Mode in the Gemini app, you’ll be able to give it a task to complete and the tool will go off and do it on your behalf, like with other AI agents. Pichai discussed an example of two people looking for an apartment in Austin, Texas. He said that the agent can find listings from sites like Zillow and use Project Mariner when needed to adjust specific filters.

Gemini 2.5 Flash Upgraded Version

Image Credit : Google

Google I/O 2025 Opening Film

Google’s AI-generated opening video for I/O used a new video generation model, Veo 3, according to the description of the video. The video looked a little rough, if you ask me.

Project Astra

Google’s low-latency, multimodal AI experience, Project Astra, will power an array of new experiences in Search, the Gemini AI app, and products from third-party developers.

Image Credit:Google

Project Astra was born out of Google DeepMind as a way to showcase nearly real-time, multimodal AI capabilities. The company says it’s now building Project Astra glasses with partners including Samsung and Warby Parker, but it doesn’t have a set launch date yet.

In another cool video demo, Google showed how Project Astra can do research for you, such as figuring out how to repair a bike. Gemini does the research and can guide you with instructions, plus it can apparently make phone calls to bike shops about specific parts that are needed.

Image Credit : Google

At Google I/O, the company showed Project Astra running on a Pixel 9 Pro. The user asks for a bike repair manual, and Astra finds a PDF, opens it, and scrolls to the right page. Then it opens YouTube, finds a tutorial, and plays the video. It searches Gmail for an old message and highlights the right bike part in a pile of parts.

Astra also makes a phone call to a bike shop, asks if a part is in stock, and confirms the order later. When someone interrupts the session, Astra pauses. The user asks to continue, and the conversation picks up where it left off. Later, when the user asks for a bike basket that fits their dog, Astra remembers the dog’s name.

Google calls this “Action Intelligence,” and it combines memory, screen control, live conversation, and phone calls. This isn’t just a voice assistant. It’s a real-time agent with a full view of your screen and surroundings. The demo video is a must-watch.

Veo 3 video generator can now create sounds and voices in video

Image Credit:Google

Google claims that Veo 3 can generate sound effects, background noises, and even dialogue to accompany the videos it creates. Veo 3 also improves upon its predecessor, Veo 2, in terms of the quality of footage it can generate, Google says.

Veo 3 is available beginning Tuesday in Google’s Gemini chatbot app for subscribers to Google’s $249.99-per-month AI Ultra plan, where it can be prompted with text or an image.

Imagen 4 AI image generator – Realistic and detailed images

According to Google, Imagen 4 is fast — faster than Imagen 3. And it’ll soon get faster. In the near future, Google plans to release a variant of Imagen 4 that’s up to 10x quicker than Imagen 3.

Imagen 4 is capable of rendering “fine details” like fabrics, water droplets, and animal fur, according to Google. It can handle both photorealistic and abstract styles, creating images in a range of aspect ratios and up to 2K resolution.

A sample from Imagen 4 | Image Credit : Google

Both Veo 3 and Imagen 4 will be used to power Flow, the company’s AI-powered video tool geared towards filmmaking.

AI Mode is obviously the future of Google Search

So far, AI Mode is a tab in Search. But it’s also beginning to overtake Search. | Image Credit : Google

Google is integrating Gemini models into Search through AI Mode, a powerful new AI search experience that helps answer questions. It appears as a new tab within Search, and Gemini will also be included in AI Overviews.

For now, AI Mode is just an option inside of Google Search. But that might not last. At its I/O developer conference on May 20th, Google announced that it is rolling AI Mode out to all Google users in the US, as well as adding several new features to the platform. In an interview ahead of the conference, the folks in charge of Search at Google made it very clear that if you want to see the future of the internet’s most important search engine, then all you need to do is tab over to AI Mode.

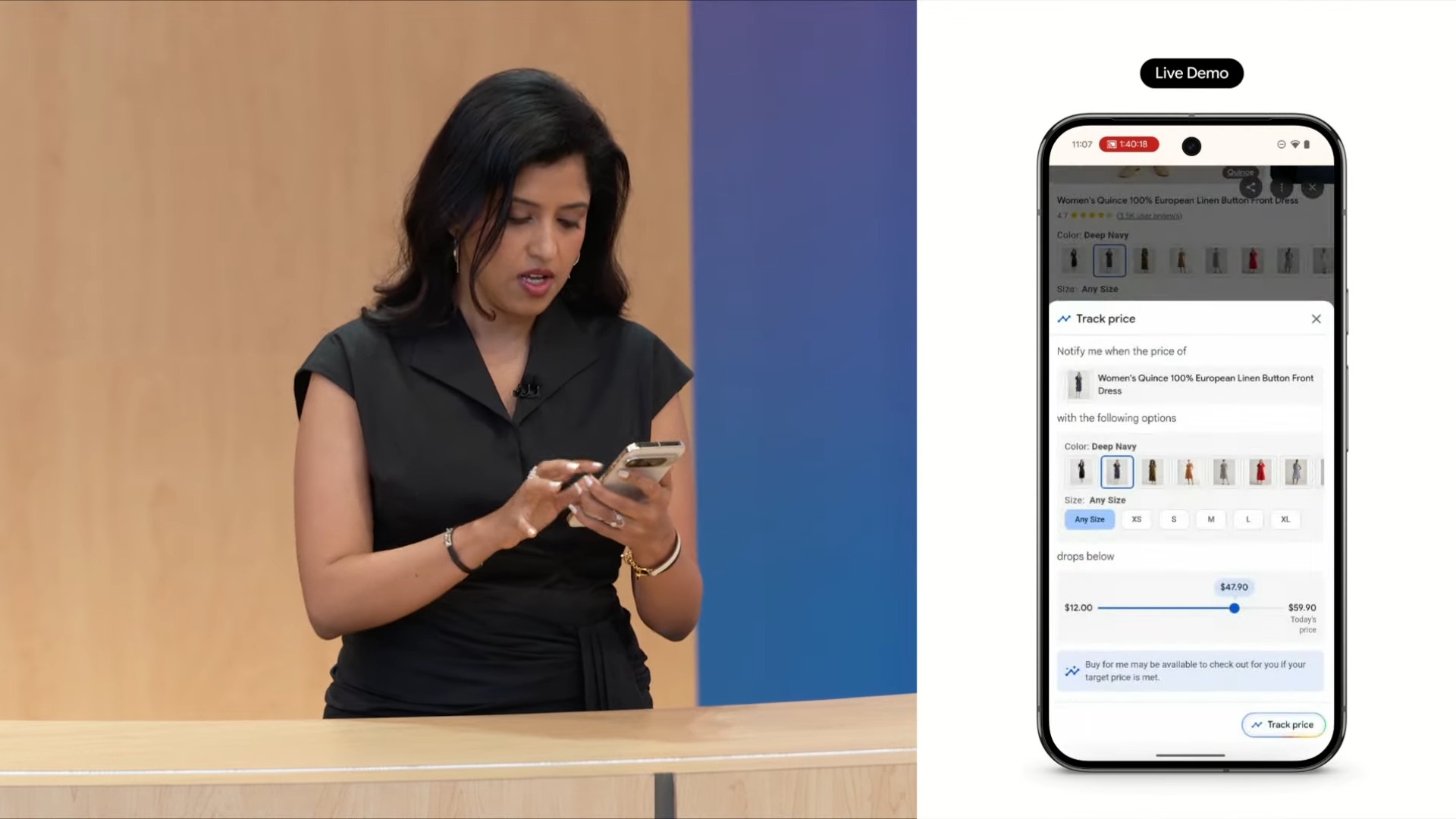

Google will let you ‘try on’ clothes with AI

Image Credit : Google

A new “try it on” image generation model in Search is built specifically to create images of the clothes you want to wear, with diffusion models that tailor the image to different fabrics.

The tool will also continually check websites to track an item’s price and send you a notification when it drops. The new feature is rolling out in Search Labs in the US today. Once you opt into the experiment, you can check it out by selecting the “try it on” button next to pants, shirts, dresses, and skirts that appear in Google’s search results. Google will then ask for a full-length photo, which the company will use to generate an image of you wearing the piece of clothing you’re shopping for. You can save and share the images.

Google Beam is an AI-first video conferencing platform

Image Credit : Google

Google’s futuristic conference-calling experiment has been threatening to become commercially available for a while, and Sundar Pichai just said it’s coming this year through devices from HP. And to be fair, “Google Beam” is a less fun but much more Google-y name than Starline.

Google Beam aims to transform video conferencing using an array of cameras. The first Google Beam devices will be coming soon, but no price has been revealed.

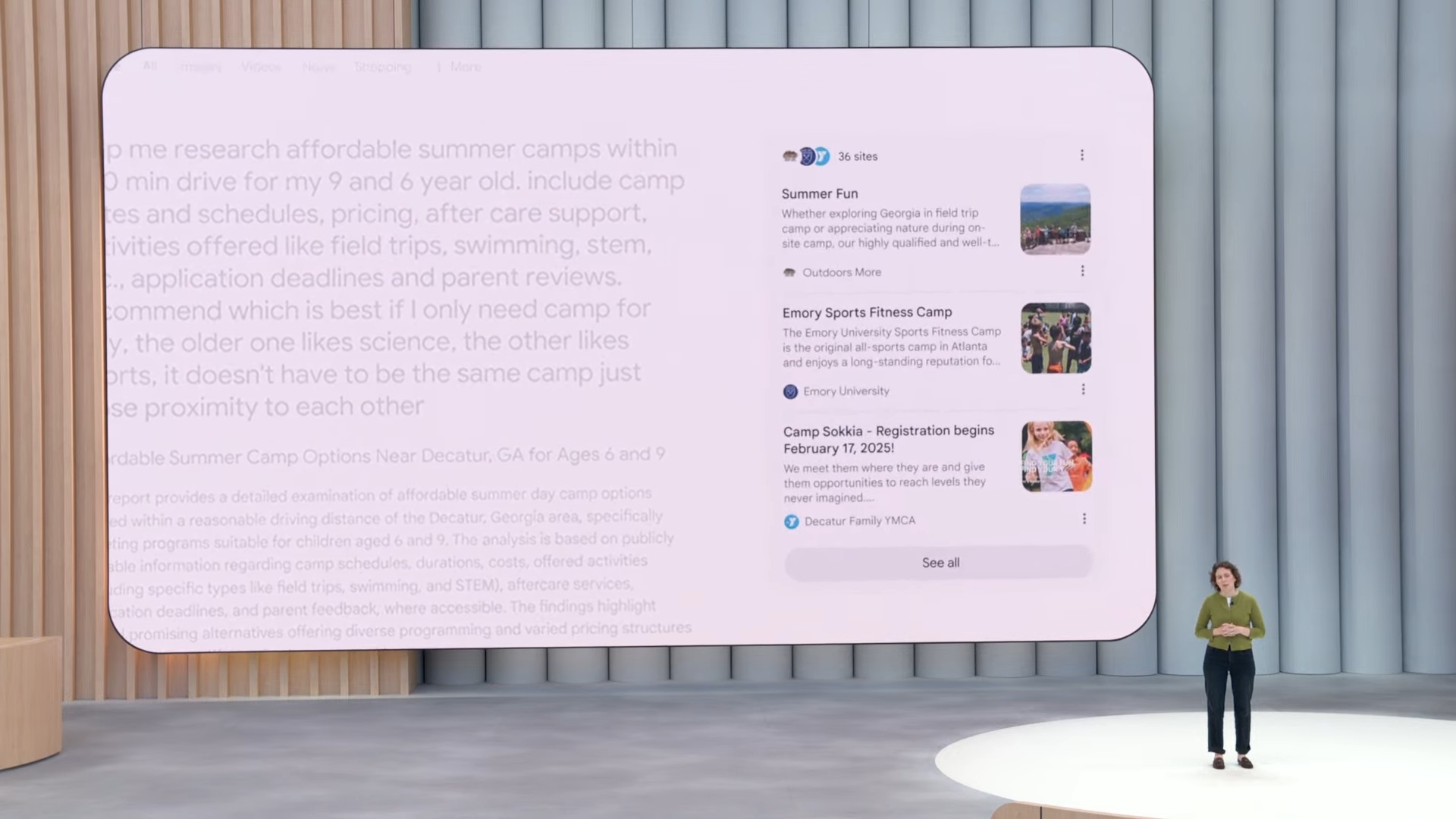

DeepSearch will deliver more relevant content with Search

Deep Think is an “enhanced” reasoning mode for Google’s flagship Gemini 2.5 Pro model. It allows the model to consider multiple answers to questions before responding, boosting its performance on certain benchmarks.

Image Credit : Google

Deep Research capabilities are coming to AI Mode in a feature Google is calling DeepSearch. It will better curate research topics with logical search results and hyper-relevant content.

The latest on WearOS 6

Image Credit : Google

Android may have gotten the focus at last week’s Android Show event, but Google has other software platforms, and WearOS is getting some changes, too. Google’s smartwatch software will soon be updated, with WearOS 6 boasting a number of noteworthy changes.

- The same Material 3 Expressive design coming to Android 16 is also part of WearOS 6, with scrolling animations that match the curved display of Google’s watches and shape-morphing elements that adapt to the watch’s screen size.

- Full-width glanceable buttons stretch across the width of the watch display to make them easier to tap.

- As part of Google’s push to extend Gemini beyond smartphones, the smart assistant is coming to WearOS so you can now use natural language queries to interact with your watch.

- A 10% improvement in battery life is coming thanks to behind-the-scenes changes.

- Dynamic color theming extends the color of the watch face across the WearOS interface.

Gemini Diffusion – A faster, more efficient AI research model

Google is bringing diffusion to text with Gemini Diffusion, a new research model. The model is much more efficient and faster at answering prompts. Its external benchmark performance is comparable to much larger models, while also being faster.

Image Credit : Google

Search Live uses your camera to answer questions

Google announced two new ways to access its AI-powered “Live” mode, which lets users search for and ask about anything they can point their camera at. The feature will arrive in Google Search as part of its expanded AI Mode and is also coming to the Gemini app on iOS, having been available in Gemini on Android for around a month.

Image Credit : Google

Search Live combines Project Astra with AI Mode, so you can use your phone’s camera to get meaningful search results. It’s a new way to perform searches with the camera, rather than typing everything out.

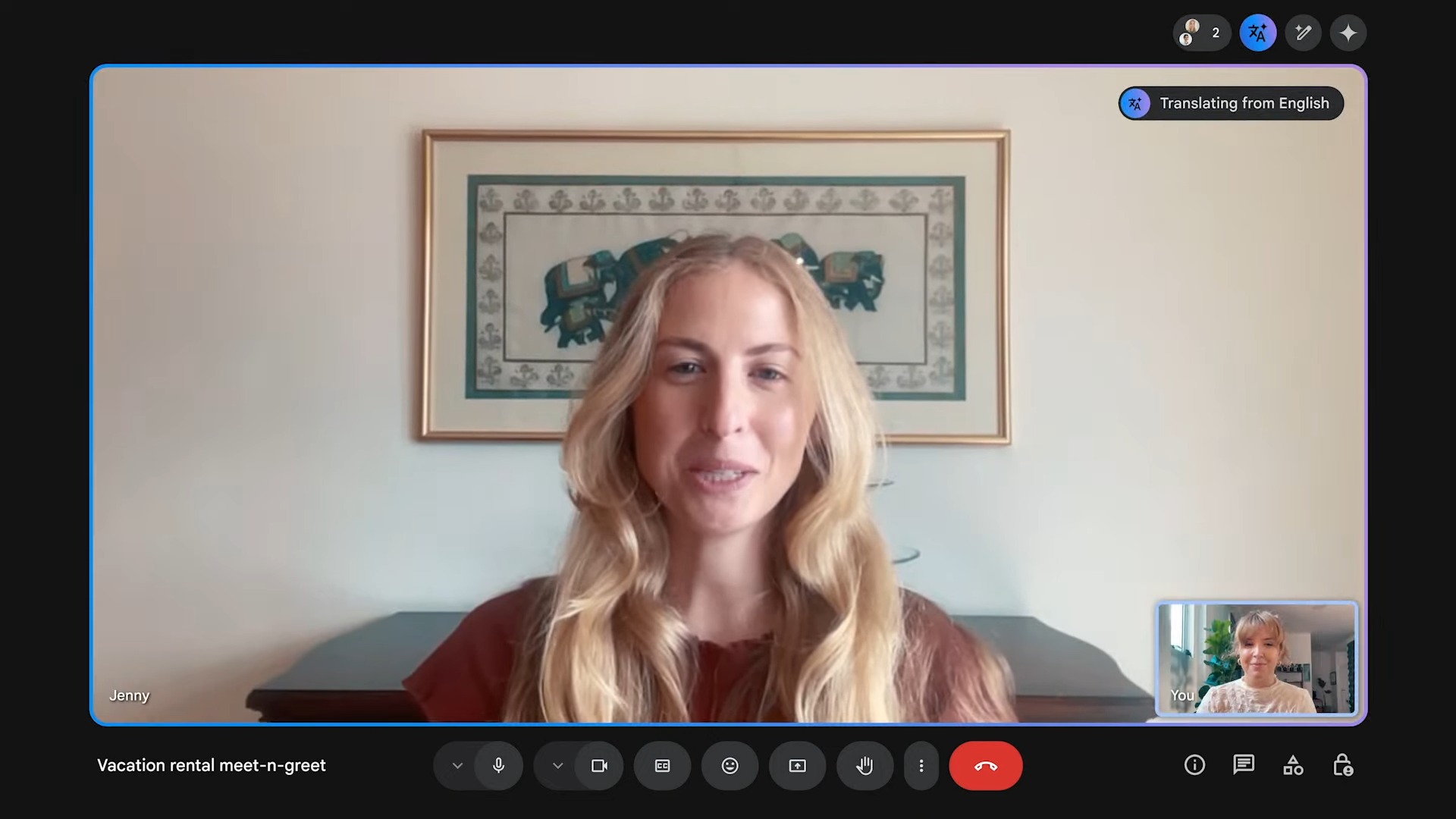

Google Meet gains live translation

Google Meet will be able to translate languages for a more intuitive conversation between two people, making communication more inclusive for global teams. Users can enable translated captions and choose their preferred language in the meeting settings. This feature is accessible on both computer and mobile devices.

Image Credit : Google

A quick demo on stage showed how two people can use it to communicate despite speaking different languages.

Looking ahead to I/O

Earlier I posted a Google I/O 2025 event preview, which covers in a little more depth some of the things we’re likely to see during the keynote. But I also wrote about the show’s heavy focus on AI this year — at least, from all outward signs — and why that’s leaving me a little wary.

Image credit: Glenn Chapman/AFP via Getty Images

It’s not really Google’s fault. But with all the hype around AI these days, I’m feeling kind of tired of hearing big promises without seeing real benefits in my daily life. Some features have looked cool, but they haven’t actually changed how I use my tech every day in a meaningful way.

I’m not asking for a major breakthrough at Google I/O. I just hope Google spends more time explaining how these new AI tools can actually help me, instead of only showing off flashy demos that look impressive but feel far away from real use.

Final Thoughts

Google I/O 2025 made it crystal clear that AI isn’t just a side feature — it’s the foundation of Google’s entire ecosystem moving forward. From advanced coding tools and Search transformations to proactive digital assistants and premium AI access, the company is reshaping how we interact with technology.

With projects like Astra, Mariner, Agent Mode, and Imagen 4, users can expect a more intelligent, visual, and helpful experience across every Google product. Whether you’re a developer, content creator, or everyday user — the AI revolution is here, and Google is leading the charge.

For more updates visit buzz4ai.in
