Step-by-Step Guide on Building AI Agents for Beginners

Ever wished you had a virtual assistant that could handle repetitive tasks, make smart decisions, and respond like a pro, all without needing constant input? That’s exactly what AI agents do. From automating customer queries to optimizing workflows, these intelligent systems are becoming an essential part of software development.

But if you’re new to AI, the idea of building one might sound like a lot to take in. The good news? You don’t need to be an expert in machine learning to get started. With the right approach, even beginners can build AI agents that solve real problems.

In this blog, we’ll walk you through the basics, breaking everything down into simple, actionable steps. By the end, you’ll know:

- What AI agents are and how they work

- The core components that make them tick

- A step-by-step approach to building AI agents

- The best tools and frameworks to make the process easier

Let’s get started!

What is an AI Agent?

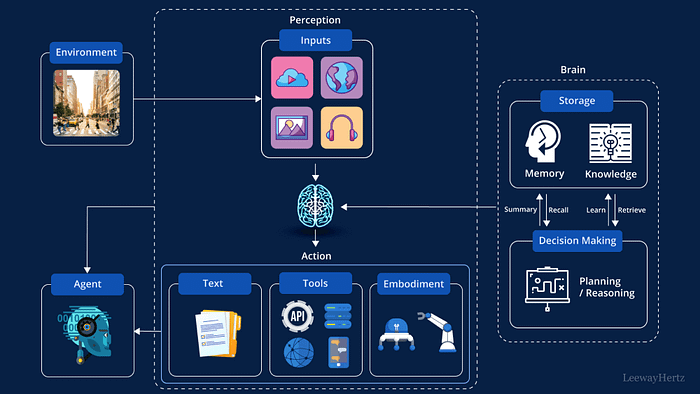

An AI agent is a software entity that perceives its environment, processes information, and takes actions to achieve specific goals autonomously. These agents can range from simple rule-based systems to complex learning models. They are designed to operate without constant human intervention, making decisions based on data inputs and predefined objectives.

Think of it like a self-driving car. It gathers data from sensors (like cameras and radars), analyzes the road conditions, predicts obstacles, and makes decisions in real time. Similarly, AI agents in software applications collect inputs, analyze patterns, and take actions to complete tasks.

Key Characteristics of AI Agents

- Autonomy: AI agents operate independently, making decisions without human input.

- Perception: They gather data from their environment through sensors or data inputs.

- Reactivity: Agents respond to changes in their environment promptly.

- Proactiveness: Beyond reacting, they can take initiative to achieve goals.

- Social Ability: Some agents can interact with other agents or humans to complete tasks.

These characteristics enable AI agents to function effectively in dynamic environments, adapting to new information and challenges.
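The perceive, decide, act cycle behind these characteristics can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `ThermostatAgent` class, its target temperature, and the sample readings are all hypothetical and stand in for whatever environment your agent observes.

```python
class ThermostatAgent:
    """Hypothetical agent that keeps a room near a target temperature."""

    def __init__(self, target_c: float, tolerance_c: float = 0.5):
        self.target_c = target_c
        self.tolerance_c = tolerance_c

    def perceive(self, reading_c: float) -> float:
        # Perception: a real agent would read a sensor or call an API here.
        return reading_c

    def decide(self, temperature_c: float) -> str:
        # Reactivity: choose an action from the current state of the environment.
        if temperature_c < self.target_c - self.tolerance_c:
            return "heat"
        if temperature_c > self.target_c + self.tolerance_c:
            return "cool"
        return "idle"

    def step(self, reading_c: float) -> str:
        # Autonomy: one full perceive -> decide cycle with no human input.
        return self.decide(self.perceive(reading_c))


agent = ThermostatAgent(target_c=21.0)
actions = [agent.step(reading) for reading in (18.0, 21.2, 24.5)]
print(actions)  # ['heat', 'idle', 'cool']
```

Keeping perception and decision in separate methods makes it easier to swap in a real data source later without touching the decision logic.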

Building an AI Agent — The Basic Concept

Building an AI agent involves several steps, starting from defining the problem and collecting data to developing the agent and deploying it.

Here’s a basic outline:

- Define Objectives: Determine the goals and tasks the AI agent will perform.

- Gather Data: Collect relevant data the agent will use to learn and make decisions.

- Choose Algorithms: Select appropriate algorithms and models based on the complexity and requirements of the tasks.

- Develop the Agent: Implement the agent using programming languages and AI frameworks.

- Train the Agent: Train the agent using the collected data to learn patterns and make accurate decisions.

- Deploy the Agent: Deploy the trained agent in the intended environment for real-world applications.

- Monitor and Improve: Continuously monitor the agent’s performance and make necessary improvements.

Types of AI Agents

AI agents aren’t one-size-fits-all. Depending on their complexity and capabilities, they can be categorized into:

1. Reactive Agents – The Simplest Form of AI

- These agents operate based on predefined rules and do not store any past experiences.

- They react to inputs in real-time but cannot learn or improve over time.

- Since they lack memory, they are fast and efficient, making them ideal for simple, rule-based tasks.

Examples:

- Spam Filters – Email systems classify incoming messages based on fixed rules (e.g., certain words trigger spam detection).

- Basic AI in Games – Non-player characters (NPCs) that react to player actions in a predictable way.

- Autonomous Sensors – Devices that trigger alarms based on environmental changes, like motion detectors.
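A reactive agent like the spam filter above can be as small as a lookup against fixed rules. The sketch below assumes a made-up keyword list (`SPAM_KEYWORDS`) and a hypothetical `classify_email` function; real spam filters use far richer signals.

```python
# Hypothetical fixed rules; a reactive agent never updates these on its own.
SPAM_KEYWORDS = {"free money", "winner", "click here"}


def classify_email(subject: str) -> str:
    """Return 'spam' if any fixed keyword appears in the subject, else 'inbox'."""
    lowered = subject.lower()
    if any(keyword in lowered for keyword in SPAM_KEYWORDS):
        return "spam"
    return "inbox"


print(classify_email("You are a WINNER, click here!"))  # spam
print(classify_email("Meeting notes for Tuesday"))      # inbox
```

The function keeps no state between calls, which is what makes it fast and predictable, and also why it cannot improve over time.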

2. Limited Memory Agents – Learning from Past Interactions

- These agents retain past data for a short duration to improve decision-making.

- They make adjustments based on recent inputs but do not continuously evolve over long-term experiences.

- Many AI systems today fall into this category since they combine real-time reactions with short-term learning.

Examples:

- Chatbots (e.g., GPT-powered bots) – These agents remember parts of a conversation to maintain context but reset when a session ends.

- Self-Driving Cars – They analyze the past few seconds of data to predict lane changes, pedestrian movement, and traffic signals.
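One simple way to model short-term memory is a fixed-size buffer that forgets the oldest entries. The following sketch uses Python's `collections.deque` with `maxlen`; the `ShortMemoryBot` class and the sample messages are hypothetical.

```python
from collections import deque


class ShortMemoryBot:
    """Hypothetical bot that keeps only the most recent conversation turns."""

    def __init__(self, max_turns: int = 3):
        # With maxlen set, appending to a full deque drops the oldest item.
        self.history = deque(maxlen=max_turns)

    def receive(self, message: str) -> None:
        self.history.append(message)

    def context(self) -> list[str]:
        # The recent turns the bot can still "see" when composing a reply.
        return list(self.history)

    def end_session(self) -> None:
        # Memory resets when the session ends.
        self.history.clear()


bot = ShortMemoryBot(max_turns=3)
for text in ["hi", "my order is late", "it is order 123", "can you help?"]:
    bot.receive(text)
print(bot.context())  # ['my order is late', 'it is order 123', 'can you help?']
```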

3. Goal-Based Agents – Evaluating Actions to Achieve a Goal

- These agents don’t just react but evaluate multiple possible actions before making a decision.

- They use search algorithms and decision trees to determine the best course of action.

- Unlike limited-memory agents, they actively consider future outcomes rather than just past interactions.

Examples:

- AI in Chess & Board Games – Programs like AlphaZero search through many candidate moves and their likely outcomes before selecting the most promising one.

- Navigation Systems (e.g., Google Maps) – They calculate multiple routes and suggest the most efficient path.
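To illustrate how a goal-based agent weighs alternatives, here is a small route finder using Dijkstra's algorithm. It is not how any particular navigation product works; the `ROADS` graph, its travel costs, and the `cheapest_route` function are invented for this example.

```python
import heapq

# Hypothetical road network: node -> {neighbor: travel cost in minutes}.
ROADS = {
    "home": {"a": 4, "b": 1},
    "a": {"office": 1},
    "b": {"a": 2, "office": 6},
    "office": {},
}


def cheapest_route(graph, start, goal):
    """Dijkstra's algorithm: return (total_cost, path), or (inf, []) if unreachable."""
    frontier = [(0, start, [start])]  # priority queue ordered by cost so far
    visited = set()
    while frontier:
        cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, weight in graph[node].items():
            if neighbor not in visited:
                heapq.heappush(frontier, (cost + weight, neighbor, path + [neighbor]))
    return float("inf"), []


print(cheapest_route(ROADS, "home", "office"))  # (4, ['home', 'b', 'a', 'office'])
```

The agent compares three possible routes here and picks the one with the lowest total cost, even though its first leg is not the most direct.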

4. Learning Agents

- These agents use machine learning and deep learning to continuously improve based on experience.

- They often incorporate techniques such as reinforcement learning, meaning they get better with feedback over time.

- Unlike limited-memory agents, they store large datasets and use past experiences to refine decision-making.

Examples:

- Netflix’s Recommendation System – Analyzes your viewing history to personalize suggestions.

- AI Assistants (e.g., Siri, Alexa, Google Assistant) – Learn user preferences and adapt responses accordingly.
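The idea of improving from feedback can be shown with a running average of rewards per action. This is a deliberately tiny sketch, not how Netflix or any assistant actually works: the `PreferenceLearner` class, the genres, and the reward values are hypothetical, and a real learning agent would also explore untried options rather than always picking the current best.

```python
class PreferenceLearner:
    """Hypothetical learner that tracks the average reward of each action."""

    def __init__(self, actions):
        self.values = {action: 0.0 for action in actions}
        self.counts = {action: 0 for action in actions}

    def update(self, action: str, reward: float) -> None:
        # Incremental mean: new_avg = old_avg + (reward - old_avg) / n
        self.counts[action] += 1
        n = self.counts[action]
        self.values[action] += (reward - self.values[action]) / n

    def best_action(self) -> str:
        # Greedy choice: the action with the highest average reward so far.
        return max(self.values, key=self.values.get)


learner = PreferenceLearner(["comedy", "drama", "documentary"])
feedback = [
    ("comedy", 1.0), ("drama", 0.0), ("comedy", 0.0),
    ("documentary", 1.0), ("documentary", 1.0),
]
for genre, reward in feedback:
    learner.update(genre, reward)
print(learner.best_action())  # documentary
```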

Why AI Agents Matter

From virtual assistants like Siri and Alexa to AI-powered customer support bots, AI agents are shaping how businesses automate tasks and interact with users. They help companies scale customer service, optimize workflows, and even enhance decision-making.

Now that we know what AI agents are, let’s move on to how you can build one from scratch.

How to Build Agentic AI: A 7-Step Process

Building and training AI agents involves a systematic process of designing, developing, and deploying intelligent systems that can perceive their environment, learn from data, and make decisions to achieve specific goals.

This process typically involves several key steps, from defining the agent’s purpose to deploying it in a real-world setting. The following outlines the core stages involved in creating your own AI agent.

Step 1: Define the Purpose of Your AI Agent

Before jumping into coding, the first step is to clearly define what your AI agent will do. An AI agent without a clear goal is like a GPS without a destination—directionless and ineffective.

Ask Yourself These Questions:

- What problem will this AI agent solve?

- Who will use it, and how?

- What kind of input will it process? (Text, voice, images, etc.)

- What kind of decisions will it make?

- What level of autonomy does it need?

For example, if you’re building a customer support chatbot, its purpose might be to:

- Answer frequently asked questions.

- Direct users to the right support channels.

- Reduce response times by automating repetitive queries.

If you’re creating an AI-powered email sorter, it might:

- Categorize emails based on urgency.

- Detect spam and filter promotional content.

- Highlight priority messages.

Choosing the Right Type of AI Agent

Based on your answers, you’ll determine which AI agent type best fits your goal:

- Rule-based tasks? → Reactive Agent

- Short-term learning? → Limited Memory Agent

- Complex decision-making? → Goal-Based Agent

- Continuous learning? → Learning Agent

Once you’ve nailed down the purpose, you’re ready for the next step: choosing the right tools and frameworks.

Step 2: Choosing the Right Tools and Frameworks

Now that we have a clear purpose for our AI agent, the next step is selecting the right programming language, frameworks, and libraries. Your choice will depend on what your AI agent needs to do—whether it’s processing text, analyzing images, handling conversations, or making predictions.

Programming Languages for AI Development

The foundation of any AI agent is the programming language it’s built with. While AI can be developed in many languages, here are the most commonly used ones:

1. Python – The most beginner-friendly and widely used

Why? Python is easy to read and has a massive ecosystem of AI and machine learning libraries, making development faster and more efficient.

Best for: AI-powered chatbots, recommendation systems, predictive analytics, NLP-based agents, and deep learning applications.

2. JavaScript – Best for web-based AI applications

Why? If your AI agent will be embedded into a website or web app, JavaScript (with frameworks like TensorFlow.js) allows you to run machine learning models directly in the browser.

Best for: AI-powered chatbots, web-based assistants, and browser-based AI tools.

3. Java – Popular in enterprise AI applications

Why? Java is used in large-scale AI solutions, particularly in banking, healthcare, and corporate AI applications, thanks to its speed and scalability.

Best for: AI-powered fraud detection, automation tools, and enterprise-grade AI agents.

4. C++ – High-performance AI for real-time applications

Why? C++ is the go-to choice for performance-intensive applications, such as AI in gaming, robotics, and embedded systems.

Best for: AI-powered self-driving cars, robotics, and gaming AI.

AI Libraries and Frameworks

Once you’ve chosen a programming language, you’ll need specialized libraries and frameworks to power your AI agent. These tools help with natural language processing, machine learning, speech recognition, and more.

AI Tools for Natural Language Processing (NLP)

Used for chatbots, voice assistants, and text analysis.

- NLTK (Natural Language Toolkit) – Provides tools for tokenization, part-of-speech tagging, and text classification.

- spaCy – Faster and more efficient than NLTK, best for real-time NLP applications.

- Transformers (by Hugging Face) – Enables integration with pre-trained AI models like GPT, BERT, and T5, reducing the need to train from scratch.

Example Use Case: If you’re building a customer support chatbot, it will need NLP libraries to understand and process user queries.
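To get a feel for what "understanding a query" involves before installing any NLP library, the sketch below tokenizes text with a regular expression and matches it against keyword sets. It is a standard-library stand-in, not NLTK or spaCy usage; the `INTENT_KEYWORDS` table and both functions are hypothetical.

```python
import re

# Hypothetical intents and the keywords that signal them.
INTENT_KEYWORDS = {
    "refund": {"refund", "money", "return"},
    "shipping": {"shipping", "delivery", "track", "late"},
    "greeting": {"hello", "hi", "hey"},
}


def tokenize(text: str) -> list[str]:
    # Lowercase, then keep runs of letters, digits, and apostrophes.
    return re.findall(r"[a-z0-9']+", text.lower())


def detect_intent(text: str) -> str:
    tokens = set(tokenize(text))
    # Score each intent by how many of its keywords appear in the query.
    scores = {intent: len(tokens & words) for intent, words in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


print(tokenize("Where's my delivery?"))                       # ["where's", 'my', 'delivery']
print(detect_intent("My delivery is late, can I track it?"))  # shipping
print(detect_intent("What is the weather?"))                  # unknown
```

Dedicated NLP libraries handle the cases this approach misses, such as word variations ("delivered"), misspellings, and meaning that depends on context.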

AI Tools for Machine Learning & Model Training

These tools are essential for training AI agents to make decisions. If you’re wondering how to train AI agents, these tools enable the process by optimizing models, fine-tuning algorithms, and helping agents learn from data to make intelligent, autonomous decisions.

- TensorFlow – A powerful deep learning framework used for complex AI model training and deployment.

- PyTorch – More flexible than TensorFlow, used in AI research and advanced machine learning applications.

- Scikit-learn – Best for traditional machine learning algorithms like classification, regression, and clustering.

Example Use Case: If your AI agent needs to predict customer behavior based on past interactions, you’ll train it using Scikit-learn or TensorFlow.

AI Tools for Computer Vision

Used for image and video recognition AI agents.

- OpenCV (Open-Source Computer Vision Library) – Helps in object detection, image processing, and facial recognition.

- TensorFlow/Keras for Vision – Used for deep learning-based image classification and recognition tasks.

Example Use Case: If you’re building an AI agent that analyzes images (e.g., medical X-rays or security footage), OpenCV + TensorFlow will be your best bet.

AI Tools for Speech Recognition

Used for voice-based AI assistants.

- CMU Sphinx – A lightweight, offline speech-to-text tool.

- DeepSpeech (by Mozilla) – A deep learning-powered speech recognition engine.

- Google Speech-to-Text API – A cloud-based service for real-time voice recognition and transcription.

Example Use Case: If you’re building a voice assistant like Siri, it needs a speech recognition tool to convert spoken commands into text for processing.

AI Tools for Web-based AI Agents

Used for AI-powered web applications and chatbots.

- Rasa – An open-source chatbot framework with built-in NLP capabilities.

- Dialogflow (by Google) – A no-code/low-code AI chatbot builder.

- TensorFlow.js – A JavaScript library for running AI models directly in a web browser.

Example Use Case: If you want an AI-powered chatbot for a website, Rasa or Dialogflow would be the easiest way to implement it.

Pro Tip: If you’re new to AI, start with Python and Scikit-learn before moving on to advanced deep learning tools like TensorFlow.

Once you’ve selected the right tools, it’s time to feed your AI agent with data so it can learn and make smart decisions.

Step 3: Gathering Data

Once you’ve defined your goals and chosen your tools, the next step is to gather relevant data. This stage is about collecting both qualitative and quantitative data to inform and train your AI agent. Here’s how to approach it:

- Identify Data Sources: Start by gathering data from internal systems like CRM, ERP, or spreadsheets, as well as external sources such as customer feedback, surveys, or industry reports.

- Gather Quantitative Data: Look for measurable metrics like sales numbers, response times, or production rates. These help pinpoint where bottlenecks or inefficiencies may exist.

- Collect Qualitative Insights: Talk to stakeholders such as team members, customers, or suppliers. These insights provide context to the numbers, helping you understand why certain outcomes occur.

- Ensure Data Accuracy: Make sure that your data is up-to-date and reliable. Outdated or incomplete information can lead to flawed insights.

By collecting the right data, you’ll be equipped to identify where an agent can help most, which will guide its design in the next step.
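The accuracy check described above can be automated with a small validation pass that rejects incomplete or outdated records before they reach the agent. The field names, the one-year cutoff, and the sample records below are assumptions made for illustration.

```python
from datetime import date, timedelta

REQUIRED_FIELDS = ("customer_id", "message", "updated")  # hypothetical schema


def validate(records, today, max_age_days=365):
    """Split records into (clean, rejected), with a reason for each rejection."""
    clean, rejected = [], []
    cutoff = today - timedelta(days=max_age_days)
    for record in records:
        # A field counts as missing if it is absent, None, or an empty string.
        missing = [f for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
        if missing:
            rejected.append((record, "missing: " + ", ".join(missing)))
        elif record["updated"] < cutoff:
            rejected.append((record, "stale"))
        else:
            clean.append(record)
    return clean, rejected


records = [
    {"customer_id": 1, "message": "Where is my order?", "updated": date(2024, 5, 1)},
    {"customer_id": 2, "message": "", "updated": date(2024, 5, 2)},
    {"customer_id": 3, "message": "Cancel please", "updated": date(2021, 1, 1)},
]
clean, rejected = validate(records, today=date(2024, 6, 1))
print(len(clean), [reason for _, reason in rejected])  # 1 ['missing: message', 'stale']
```

Recording the reason for each rejection makes it easier to go back to the data source and fix the problem rather than silently losing records.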

Step 4: Design the AI Agent

Now that you’ve identified the problem the AI agent will solve and have set clear objectives, it’s time to design the agent itself. This step involves creating a blueprint for how the AI will function, including the architecture, algorithms, and the user experience.

Here’s a breakdown of what this phase should involve:

- Choose the Right AI Model:

  - Determine whether you’ll use a pre-trained AI model (like GPT, BERT, etc.) or build a custom model from scratch.

  - Consider the complexity of the task and whether existing models can be adapted for your needs or if a custom-built solution is necessary.

- Design the Workflow:

  - Outline the steps the AI agent will take to process user inputs and generate responses.

  - Map out the interactions: what happens when the agent receives a query, how it processes the information, and how it responds.

  - Think through different user flows and edge cases. How should the agent behave if it doesn’t understand something or encounters an error?

- Select the Right Technology Stack:

  - Choose tools and platforms that will support the development of your AI agent. For example, you might use frameworks like TensorFlow or PyTorch for machine learning, or Dialogflow and Rasa for chatbot development.

  - Select an appropriate API, programming language (Python, JavaScript, etc.), and data storage solution (SQL, NoSQL, etc.).

- Create the User Interface (UI):

  - If your AI agent will have a visible user interface (such as a chatbot or virtual assistant), sketch out the user interface and user experience.

  - Focus on making the interface intuitive and user-friendly. Design conversations that feel natural and ensure your agent can handle various inputs, including voice or text.

- Determine the Integration Points:

  - Plan how the AI agent will interact with other systems or platforms (CRMs, databases, third-party services, etc.).

  - Consider the data flow and security concerns, such as how data will be stored, accessed, and protected.

- Develop a Feedback Loop:

  - Incorporate a feedback mechanism where users can provide input on the AI’s performance.

  - This will be critical for improving the AI agent over time. Plan for regular updates and improvements based on user interactions.

By the end of this step, you should have a clear design of the AI agent that meets your objectives, a plan for development, and an understanding of the tools and technologies you’ll use. This design phase sets the foundation for building and training the AI agent in the next step.
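The workflow and edge-case planning from this step can be prototyped before any model is involved. The sketch below shows one possible shape for a query handler with explicit paths for empty input and for questions the agent cannot answer; the FAQ content, status names, and function names are all hypothetical.

```python
# Hypothetical FAQ content standing in for a real model or knowledge source.
FAQ = {"opening hours": "We are open 9:00 to 17:00, Monday to Friday."}


def lookup_answer(query: str) -> str:
    """Return the FAQ answer whose key appears in the query, or raise KeyError."""
    lowered = query.lower()
    for key, answer in FAQ.items():
        if key in lowered:
            return answer
    raise KeyError(query)


def handle_query(query: str) -> dict:
    # Edge case 1: empty or whitespace-only input, so ask the user to clarify.
    if not query or not query.strip():
        return {"status": "clarify", "reply": "Could you tell me what you need help with?"}
    try:
        return {"status": "answered", "reply": lookup_answer(query)}
    except KeyError:
        # Edge case 2: the agent does not understand, so hand off gracefully.
        return {"status": "fallback", "reply": "I'm not sure about that. Let me connect you with a person."}


print(handle_query("What are your opening hours?")["status"])  # answered
print(handle_query("   ")["status"])                           # clarify
print(handle_query("Do you sell bicycles?")["status"])         # fallback
```

Returning a status alongside the reply gives the feedback loop something concrete to measure, such as how often the agent falls back.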

Step 5: Develop the AI Agent

To make this step concrete, the rest of this guide uses an AI-driven IT incident management agent as a running example. At this stage, you’re developing the agent itself: an intelligent, automated system capable of handling IT incidents swiftly and accurately, minimizing human intervention, and ultimately reducing downtime.

Here’s how to approach this crucial step:

1. Understand the AI’s Role in Incident Management

The AI agent you’re building must be capable of performing several tasks:

- Incident Detection: The agent should be able to detect incidents in real time by analyzing system alerts, logs, and user reports.

- Categorization and Prioritization: It needs to categorize incidents (e.g., software failure, hardware issue, network disruption) and prioritize them based on urgency, business impact, and available resources.

- Root Cause Analysis: The AI should dig into historical data, previous incidents, and logs to identify the root cause of recurring issues and even predict potential future incidents.

- Automated Response: In many cases, the AI should be able to resolve incidents autonomously (e.g., by restarting a service, patching vulnerabilities, or suggesting a solution).

- Escalation: When the agent cannot handle an issue independently, it must escalate the case to a human technician with relevant details to speed up resolution.
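The categorization, prioritization, and escalation tasks listed above can start out as plain rules before any model is trained. In this sketch the keyword lists, the user-count thresholds, and the rule that only lower-priority software incidents are auto-resolved are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical keywords per incident category.
CATEGORY_KEYWORDS = {
    "network": ("timeout", "dns", "packet loss"),
    "hardware": ("disk", "fan", "power supply"),
    "software": ("exception", "crash", "memory leak"),
}
AUTO_FIXABLE = {"software"}  # assumption: only these may be resolved without a human


@dataclass
class Triage:
    category: str
    priority: int  # 1 = most urgent, 3 = least urgent
    action: str


def triage(alert: str, users_affected: int) -> Triage:
    text = alert.lower()
    # Categorize: the first category with a matching keyword, else "unknown".
    category = next(
        (name for name, words in CATEGORY_KEYWORDS.items() if any(w in text for w in words)),
        "unknown",
    )
    # Prioritize by business impact, approximated here by affected users.
    priority = 1 if users_affected >= 100 else 2 if users_affected >= 10 else 3
    # Respond automatically only for known-safe, non-critical cases; otherwise escalate.
    if category in AUTO_FIXABLE and priority > 1:
        action = "auto_restart_service"
    else:
        action = "escalate_to_human"
    return Triage(category, priority, action)


print(triage("Unhandled exception in billing service", users_affected=5))
print(triage("DNS timeout on gateway", users_affected=250))
```

Escalating by default, and automating only the cases you have explicitly marked as safe, keeps early mistakes from turning into outages.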

2. Choose the Right AI Models

The AI agent’s core functionality depends on selecting and training the right machine learning models. You’ll need to decide on a combination of models that best suit your incident management needs:

- Supervised Learning: For tasks like classification (e.g., categorizing incidents) and prioritization.

- Unsupervised Learning: To detect anomalies and potential issues in system behavior before they escalate into full-blown incidents.

- Natural Language Processing (NLP): If your agent needs to interact with users or technicians, NLP models can help it understand and generate human-like responses.

- Reinforcement Learning: For optimizing decision-making based on feedback from the environment. It’s useful for the AI agent to “learn” the most effective ways to handle certain types of incidents based on past actions and outcomes.
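As a taste of the anomaly detection mentioned in the list above, the sketch below flags metric values that sit unusually far from the mean, measured in standard deviations (a z-score). It is one simple statistical technique rather than a full unsupervised model, and the latency readings and the threshold of 2.0 are made up.

```python
from statistics import mean, stdev


def find_anomalies(values, threshold=2.0):
    """Return indexes of values more than `threshold` sample std devs from the mean."""
    if len(values) < 2:
        return []  # a standard deviation needs at least two points
    mu, sigma = mean(values), stdev(values)
    if sigma == 0:
        return []  # all values identical, so nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


latency_ms = [102, 98, 101, 99, 100, 103, 97, 100, 400, 101]
print(find_anomalies(latency_ms))  # [8]
```

With small samples, a single extreme value inflates the standard deviation and can hide itself, so the threshold needs tuning and median-based methods are often more robust in practice.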

3. Integrate with Existing Systems

For your AI agent to be effective, it needs to integrate smoothly with your existing IT infrastructure:

- Monitoring Systems: The AI must be able to pull data from tools like New Relic, Datadog, or Nagios for real-time system monitoring.

- Ticketing Systems: It should integrate with platforms like Jira or ServiceNow to automate ticket creation, tracking, and resolution.

- Knowledge Base: The AI should have access to an internal knowledge base or FAQs to help it suggest solutions for common incidents without escalating to human teams.

4. Data Collection and Preprocessing

A robust AI agent relies on high-quality data. The more historical incident data you have, the better the agent will perform. Begin by gathering:

- Incident Logs: System logs, past tickets, and reports on previous IT incidents.

- Resolved Incidents: Historical data on how previous issues were resolved, including time-to-resolution and solution effectiveness.

- System Metrics: Data on system performance, downtime incidents, and load metrics.

Once collected, the data needs to be cleaned, structured, and labeled. For instance, categorize incidents based on severity and resolution steps. This step ensures that the agent is trained with accurate, actionable data.

5. Create a Knowledge Base

A knowledge base is essential for the AI agent to make informed decisions. You can use machine learning to automatically create knowledge from incident resolution data or manually populate the knowledge base with common issues and resolutions.

The knowledge base should include:

- Troubleshooting steps for common incidents.

- Guidelines for incident severity and priority levels.

- Standard Operating Procedures (SOPs) for escalating complex cases.

- Best practices for minimizing incident recurrence.
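In its simplest form, a knowledge base is a lookup table from incident type to severity and troubleshooting steps. The entries and the `suggest_fix` function below are hypothetical placeholders for your own content.

```python
# Hypothetical knowledge base entries.
KNOWLEDGE_BASE = {
    "disk_full": {
        "severity": "high",
        "steps": ["Clear temp files", "Rotate logs", "Expand volume"],
    },
    "service_down": {
        "severity": "critical",
        "steps": ["Check health endpoint", "Restart service"],
    },
}


def suggest_fix(incident_type: str) -> dict:
    """Return the first troubleshooting step, or an escalation hint if unknown."""
    entry = KNOWLEDGE_BASE.get(incident_type)
    if entry is None:
        # Unknown incidents follow the escalation SOP instead of guessing.
        return {"found": False, "next": "escalate per SOP"}
    return {"found": True, "severity": entry["severity"], "next": entry["steps"][0]}


print(suggest_fix("disk_full"))      # {'found': True, 'severity': 'high', 'next': 'Clear temp files'}
print(suggest_fix("license_error"))  # {'found': False, 'next': 'escalate per SOP'}
```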

6. Train the AI Agent

Using the prepared data, you can now begin training the AI models. This step involves feeding the AI historical incident data and tuning the model to recognize patterns. The AI agent will learn to:

- Identify patterns in incidents: For example, recognizing that system downtime often correlates with a specific server failure.

- Predict outcomes: Based on patterns in previous data, it can start to predict incident outcomes and suggest corrective actions.

You can start with a small, controlled dataset to validate its performance and gradually scale as confidence in its accuracy grows.
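Before reaching for a machine learning library, it can help to see training and validation in their most basic form. The sketch below "trains" by counting which resolution worked most often for each incident category, then checks that model against held-out examples. The incident data is invented, and a majority-vote model like this is only a baseline to compare real models against.

```python
from collections import Counter, defaultdict


def train(history):
    """Learn the most frequent resolution for each incident category."""
    counts = defaultdict(Counter)
    for category, resolution in history:
        counts[category][resolution] += 1
    return {category: c.most_common(1)[0][0] for category, c in counts.items()}


def accuracy(model, examples):
    """Fraction of examples where the model's suggestion matches the real fix."""
    hits = sum(1 for category, resolution in examples if model.get(category) == resolution)
    return hits / len(examples)


# Hypothetical (category, resolution) pairs from past tickets.
history = [
    ("db_timeout", "restart_db"), ("db_timeout", "restart_db"), ("db_timeout", "add_index"),
    ("disk_full", "rotate_logs"), ("disk_full", "rotate_logs"),
]
holdout = [("db_timeout", "restart_db"), ("disk_full", "expand_volume")]

model = train(history)
print(model)                     # {'db_timeout': 'restart_db', 'disk_full': 'rotate_logs'}
print(accuracy(model, holdout))  # 0.5
```

Evaluating on data the model never saw during training is what tells you whether it has learned a pattern or merely memorized the examples.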

Step 6: Test and Iterate

No AI agent is perfect out of the gate. You must test its performance in a controlled environment before full deployment:

- Simulated Incident Testing: Feed the AI simulated incidents to see how it handles them, and measure its ability to classify, prioritize, and resolve them.

- Human-in-the-Loop (HITL) Testing: During initial deployment, allow human technicians to review and intervene when the AI escalates incidents to them. This feedback loop will help you fine-tune the system.

- Continuous Learning: As new data comes in and more incidents are resolved, ensure the AI agent can learn from each case, improving its accuracy and performance over time.
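Simulated incident testing can be set up as a small harness that feeds known cases to the agent and reports where it disagrees with the expected answer. Here `classify` is a hypothetical stand-in for the agent under test, and the simulated alerts are invented.

```python
def classify(alert: str) -> str:
    # Stand-in for the agent under test (hypothetical rules).
    text = alert.lower()
    if "disk" in text:
        return "hardware"
    if "timeout" in text:
        return "network"
    return "software"


# Simulated incidents paired with the category a human expert would assign.
SIMULATED = [
    ("Disk usage at 98%", "hardware"),
    ("Gateway timeout from upstream", "network"),
    ("Null pointer in checkout", "software"),
    ("Switch port flapping", "network"),
]


def run_simulation(agent, cases):
    """Run every case through the agent and collect the mismatches."""
    failures = []
    for alert, expected in cases:
        actual = agent(alert)
        if actual != expected:
            failures.append((alert, expected, actual))
    return {"total": len(cases), "passed": len(cases) - len(failures), "failures": failures}


report = run_simulation(classify, SIMULATED)
print(report["passed"], "of", report["total"], "passed")  # 3 of 4 passed
print(report["failures"])  # [('Switch port flapping', 'network', 'software')]
```

The failure list is the useful part: each entry is a concrete case to review with a human technician and feed back into the next round of training.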

Step 7: Monitor and Optimize

Once the AI agent is live, continual monitoring is crucial to ensure it performs well and adapts to new challenges:

- Track its incident resolution time: Are incidents being resolved faster than they were manually?

- Monitor accuracy: Is the AI correctly identifying the root cause of issues?

- Gather feedback from your team on how the AI agent’s recommendations and resolutions are impacting their workflow.

As your IT environment grows, your AI agent must be able to handle increased load and more complex incidents. Ensure the infrastructure around your AI agent can scale with the business needs.
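The first two monitoring questions above translate directly into numbers you can compute from a log of resolved incidents. The log format, field names, and the 45-minute manual baseline in this sketch are assumptions.

```python
from statistics import mean


def summarize(incidents, manual_baseline_minutes):
    """Compute average resolution time and root-cause accuracy from a log."""
    times = [i["minutes_to_resolve"] for i in incidents]
    correct = [i["root_cause_correct"] for i in incidents]
    avg = mean(times)
    return {
        "avg_minutes": avg,
        "faster_than_manual": avg < manual_baseline_minutes,
        # True counts as 1, so the sum is the number of correct diagnoses.
        "root_cause_accuracy": sum(correct) / len(correct),
    }


# Hypothetical log of incidents handled by the agent.
log = [
    {"minutes_to_resolve": 12, "root_cause_correct": True},
    {"minutes_to_resolve": 30, "root_cause_correct": False},
    {"minutes_to_resolve": 18, "root_cause_correct": True},
    {"minutes_to_resolve": 20, "root_cause_correct": True},
]
summary = summarize(log, manual_baseline_minutes=45)
print(summary)  # {'avg_minutes': 20, 'faster_than_manual': True, 'root_cause_accuracy': 0.75}
```

Tracking these figures over time, rather than as a one-off, is what reveals whether the agent is keeping up as load and incident complexity grow.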

Building an AI agent is a significant achievement. Now, ensure its success by implementing it strategically within your business.

Final Thoughts

Building AI agents is an accessible endeavor with the right guidance and tools. By understanding their characteristics and types, and following a systematic development process, beginners can create agents that automate tasks and make intelligent decisions.

As AI continues to evolve, mastering the creation of AI agents opens doors to numerous opportunities in various industries.

For more posts visit buzz4ai.in