Stitch, launched at the Google I/O 2025 developer conference, is an AI-driven tool designed to change how developers and designers create user interfaces for web and mobile applications. Powered by Gemini 2.5 Pro and Gemini 2.5 Flash, Stitch aims to simplify UI design by turning text prompts and reference images into functional, app-ready designs within minutes.

Image Credit : Google

In this blog, we look at what Stitch is, its key features, how it compares with other tools, and what it offers for web and mobile app UI design.

What is Stitch?

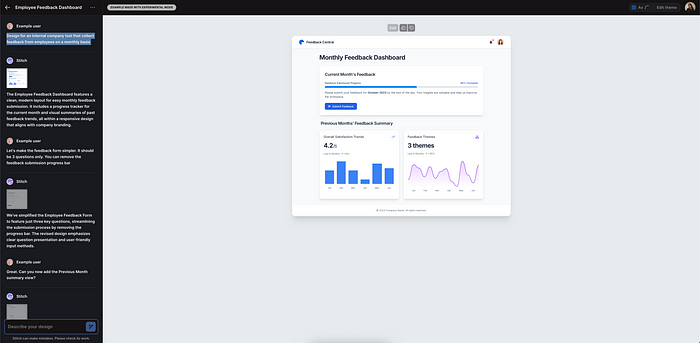

At Google I/O 2025, Google introduced Stitch, a generative AI interface builder that takes you from a prompt or a rough sketch to a working prototype within minutes. Available through Google Labs at stitch.withgoogle.com, Stitch is an early preview of how AI is reshaping the design-to-development workflow.

Stitch can be prompted to create app UIs with a few words or even an image, providing HTML and CSS markup for the designs it generates. Users can choose between Google’s Gemini 2.5 Pro and Gemini 2.5 Flash AI models to power Stitch’s code and interface ideation.

Stitch lets users choose between Gemini 2.5 Flash and Gemini 2.5 Pro models | Image Credits : Jagmeet Singh / TechCrunch

Stitch arrives as so-called vibe coding (programming with code-generating AI models) continues to grow in popularity. A number of startups are going after this burgeoning market, including Cursor maker Anysphere, Cognition, and Windsurf. Around the same time as I/O, OpenAI launched a new assistive coding service called Codex, and Microsoft used its Build 2025 kickoff to roll out a series of updates to its GitHub Copilot coding assistant.

Whether you’re a solo founder, UI/UX designer, or frontend developer, Stitch aims to change how interfaces are created by combining visual generation, code export, and design refinement in one AI-powered environment.

Core Features of Stitch

- Text-to-UI Conversion: Transform natural language prompts into detailed UI designs.

- Image-Based Input: Utilize sketches or screenshots to guide design generation.

- Multiple Design Variants: Generate various styles and layouts for comparison.

- Frontend Code Generation: Produce functional code ready for integration.

- Export Options: Export designs directly into apps or to Figma for further editing.

- Prompt-Based UI Generation: Describe a UI in plain English, and within seconds Stitch renders a responsive design based on that prompt.

- Design from Images: Sketch something on paper or a whiteboard, or take a screenshot of an app, upload it to Stitch, and it will generate a high-fidelity UI from that visual reference. In effect, this is design interpretation powered by multimodal AI.

- Live HTML/CSS Export: Stitch lets you copy clean HTML/CSS, without messy inline styles or unusable artifacts, so the exported code is ready to paste directly into your project.

Understanding AI Models in Stitch

Google’s launch of Stitch at Google I/O 2025 is a notable step in bringing artificial intelligence into app design. Stitch is powered by two AI models, Gemini 2.5 Pro and Gemini 2.5 Flash, and lets users choose the model that best fits their needs. These models enable Stitch to turn text prompts and images into complete UI elements and generate the corresponding HTML and CSS markup, simplifying the app design process.

The two models serve different purposes: Gemini 2.5 Pro delivers more detailed and nuanced design solutions, while Gemini 2.5 Flash prioritizes speed and efficiency, enabling faster iterations. This split lets developers tailor their approach to project demands, trading design fidelity against speed as needed.

The choice of AI models within Stitch underscores the tool’s adaptability, offering options for developers whether they are focused on producing intricate, high-fidelity designs or need to rapidly prototype ideas. By selecting the appropriate model, users can streamline their workflow, ensuring that the output is both timely and creatively satisfying.

Exporting and Integration Capabilities

One of Stitch’s standout features is its seamless integration with popular design tools like Figma. Users can export generated UI assets and code directly into Figma, facilitating collaboration and further refinement. Additionally, the frontend code produced by Stitch can be integrated into various development environments, streamlining the transition from design to deployment.

Soon after I/O, Google plans to add a feature that will let users change their UI designs by taking screenshots of the element they want to tweak and annotating them with the modifications they want, said Kathy Korevec, director of product at Google Labs. She added that while Stitch is reasonably powerful, it isn’t meant to be a full-fledged design platform like Figma or Adobe XD.

Stitch lacks the elements that could’ve made it a full-fledged design platform | Image Credits: Jagmeet Singh / TechCrunch

Google’s Jules AI Agent

Google’s Jules AI agent is a significant addition to Google’s suite of AI tools, designed to make the software development process more efficient and accurate. Announced as part of the broader set of launches at Google I/O 2025, Jules is now more accessible to developers through an expanded public beta. The agent stands out for its ability to handle complex programming tasks, helping developers by automating bug fixes and working through coding backlogs.

In introducing Jules, Google is addressing a crucial need in software development: efficient and reliable debugging. By using advanced AI models, Jules can significantly reduce the time and resources spent on troubleshooting, thereby accelerating project timelines and reducing costs.

Jules currently uses Gemini 2.5 Pro, but Korevec said that users will be able to switch between different models in the future.

Public Reactions to Stitch and Jules

The developer community has expressed a mix of excitement and caution regarding Stitch and Jules. Many appreciate the potential for increased productivity and streamlined workflows, while others raise concerns about over-reliance on AI tools. Overall, the reception highlights a growing interest in AI-assisted development and design processes.

Future Implications of AI in App Development

The introduction of tools like Stitch and Jules signifies a shift towards more AI-integrated development environments. As these tools evolve, they are expected to further reduce the time and effort required for app design and coding, allowing developers to focus on higher-level problem-solving and innovation. This trend points to a future where AI plays a central role in the app development lifecycle.

Final Thoughts

Google’s launch of Stitch and Jules represents a significant step forward in AI-assisted app development. By automating complex tasks in UI design and code debugging, these tools have the potential to enhance productivity and creativity within development teams.

As AI continues to integrate into development workflows, tools like Stitch and Jules are poised to become indispensable assets for developers and designers alike.

For more posts visit buzz4ai.in